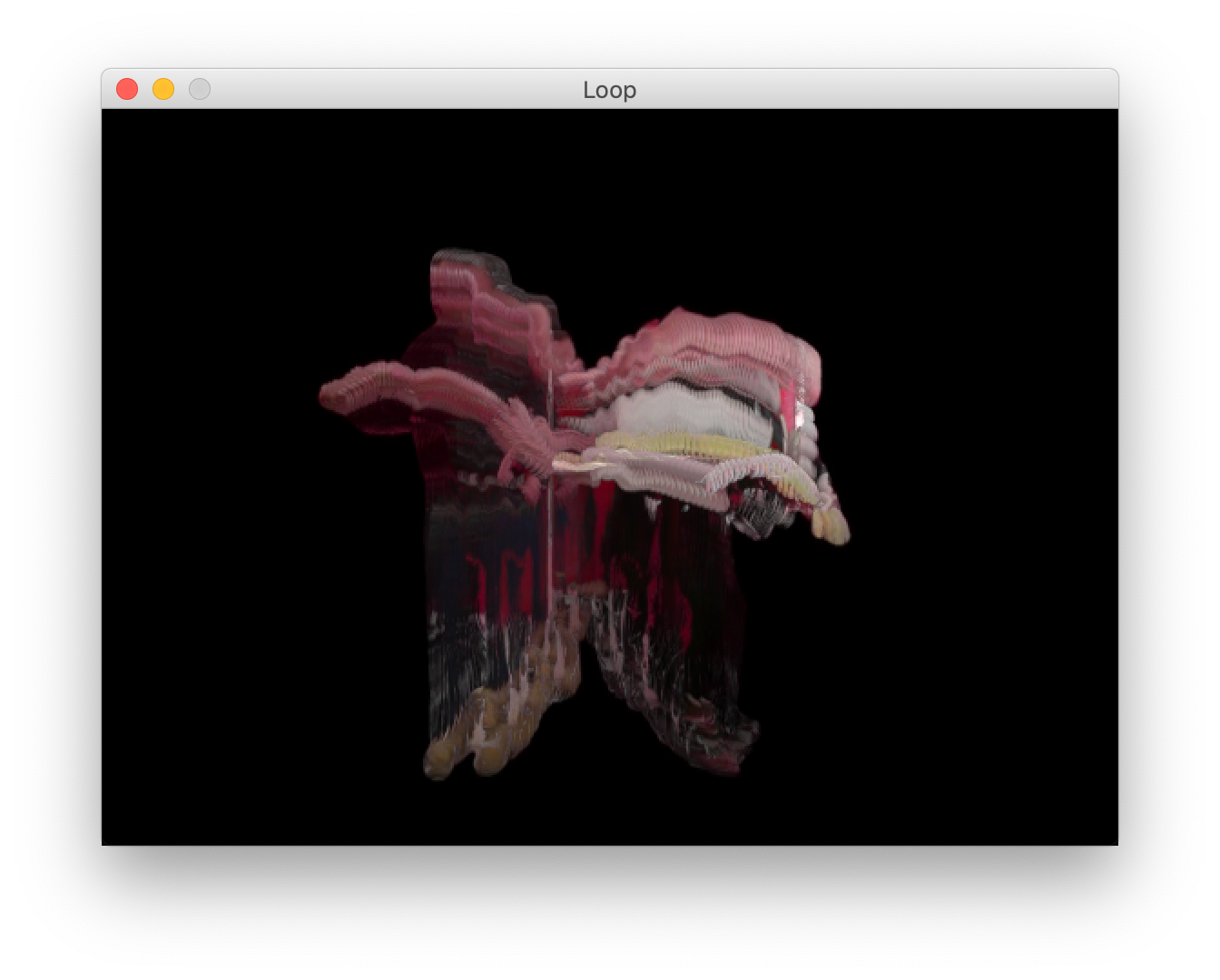

Black Mamba’s Revenge by Brendan Dawes uses AI to visualise the final fight scene in Kill Bill Vol. One

Dawes uses pixel sampling techniques together with AI to visualise the final fight scene from Quentin Tarantino's Kill Bill Vol. One. His work mirrors the dance-like quality of the scene: there are moments of flow followed by moments of frantic action.

I was interested in this work as a depiction of movement over time and as a way of isolating people within a scene. Documenting events from one point to another is something I had considered when thinking about ways of dissecting film. I was still unsure exactly what I wanted to gain from this exploration, but I thought the idea of isolating certain elements would work well.

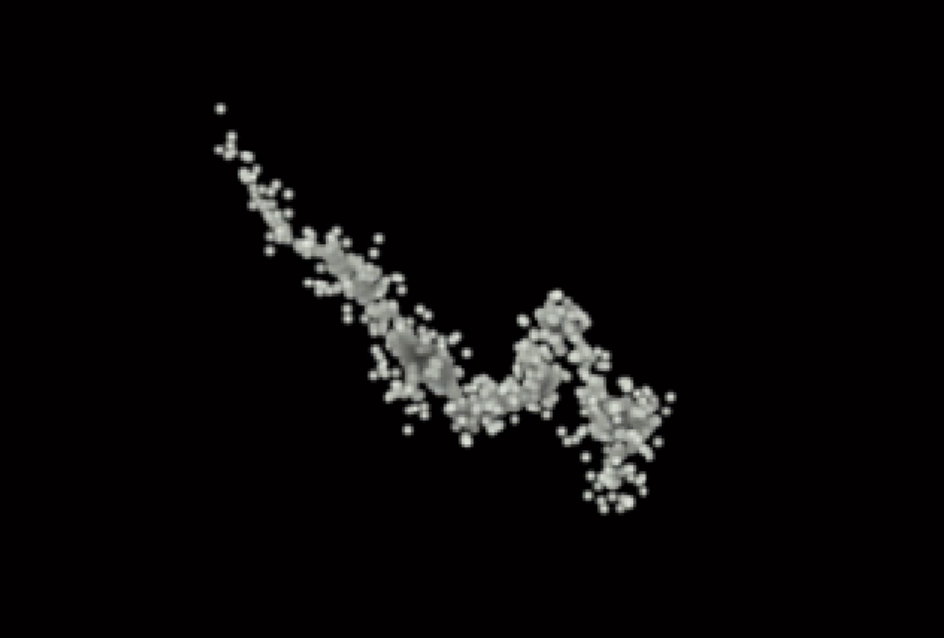

I adapted BackgroundSubtraction, a Processing example from the OpenCV library, to work with my own video below. Here I was just experimenting with different ways I could use technology to see movement. The video is of my fish tank, chosen because it contains a lot of movement. It was an interesting way to view movement: I liked the idea of only being able to see the subject when it moved, and the abstract shapes that movement left behind.
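The idea of only seeing the fish when it moves can be sketched in a few lines of plain Python. This is a minimal sketch, assuming each frame is already a small grid of greyscale values (0 to 255). It uses a simple running-average background model, swapped in for illustration rather than taken from the OpenCV example, and the names `subtract_background`, `alpha` and `threshold` are hypothetical.

```python
def subtract_background(frames, alpha=0.1, threshold=30):
    """Return one binary foreground mask per frame (1 = moving pixel).

    frames: list of 2D lists of greyscale values (0-255), all the same size.
    alpha: how quickly the background model adapts (hypothetical default).
    threshold: minimum difference from the background to count as movement.
    """
    if not frames:
        return []
    # Start the background model from the first frame.
    background = [[float(p) for p in row] for row in frames[0]]
    masks = []
    for frame in frames:
        mask = []
        for y, row in enumerate(frame):
            mask_row = []
            for x, pixel in enumerate(row):
                # A pixel is "moving" if it differs enough from the background model.
                diff = abs(pixel - background[y][x])
                mask_row.append(1 if diff > threshold else 0)
                # Blend the current pixel into the model so still objects fade away.
                background[y][x] = (1 - alpha) * background[y][x] + alpha * pixel
            mask.append(mask_row)
        masks.append(mask)
    return masks
```

Raising `alpha` makes a subject that stops moving fade into the background sooner, which is why only the moving shapes remain visible.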

Beginning to break down certain events occurring throughout a film helped me understand how to alter the way I view a moving image. By dissecting every choice, natural or synthetic, we are given vast amounts of data. But what does it mean? Is it useful? What does it tell us?

When watching Kubrick's The Shining again for the hundredth time, I began to think about movement as a central focus in his stationary one-point perspective shots. The speed of the movement, the size of the character in the frame and their general shape can tell a viewer a lot about the scene itself.

I wanted to emulate these movements and isolate them from the scene itself, so I began to use the motion tracking function in Adobe After Effects to follow these patterns, using simple shapes and colours at first to make sure the tracking was emulating the subject's movement.

At first I familiarised myself with motion tracking in AE and did some small experiments with blocks of colour to see the movement clearly.
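To make "following the movement" concrete, here is a much cruder stand-in for a tracker: the average position of the moving pixels in each frame. After Effects' tracker works differently (it follows a selected feature region from frame to frame), so this is only a sketch under the assumption that each frame has already been reduced to a grid of 0/1 values marking moving pixels; `track_centroids` is a hypothetical name.

```python
def track_centroids(masks):
    """Return the (x, y) centre of the moving pixels in each mask, or None if nothing moved.

    masks: list of 2D lists where 1 marks a moving pixel and 0 a still one.
    """
    track = []
    for mask in masks:
        total_x = total_y = count = 0
        for y, row in enumerate(mask):
            for x, value in enumerate(row):
                if value:
                    total_x += x
                    total_y += y
                    count += 1
        # Average position of all moving pixels; None when the frame is still.
        track.append((total_x / count, total_y / count) if count else None)
    return track
```

Each point in the returned track could then drive the position of a simple block of colour, which is roughly what attaching a solid to tracking data does.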

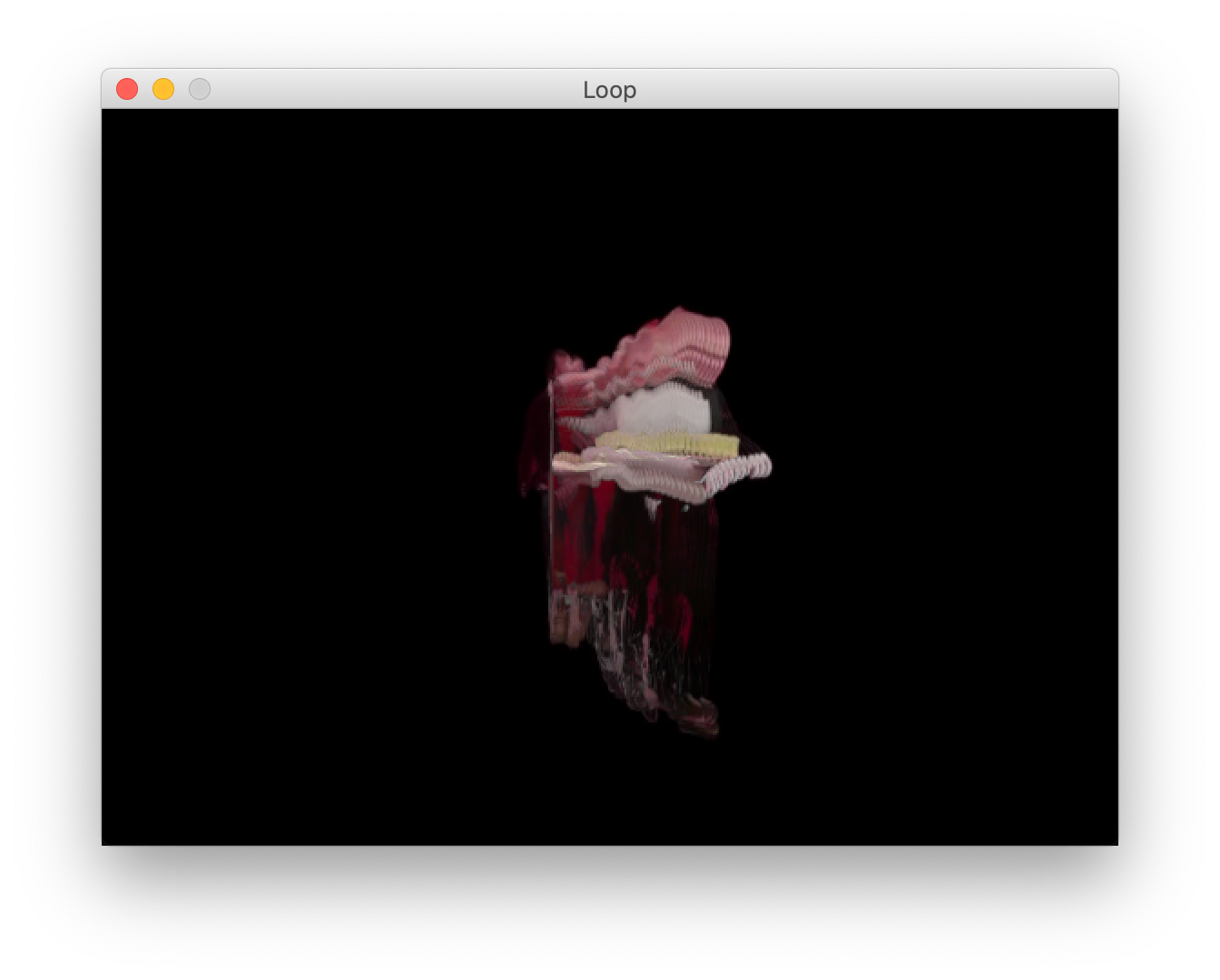

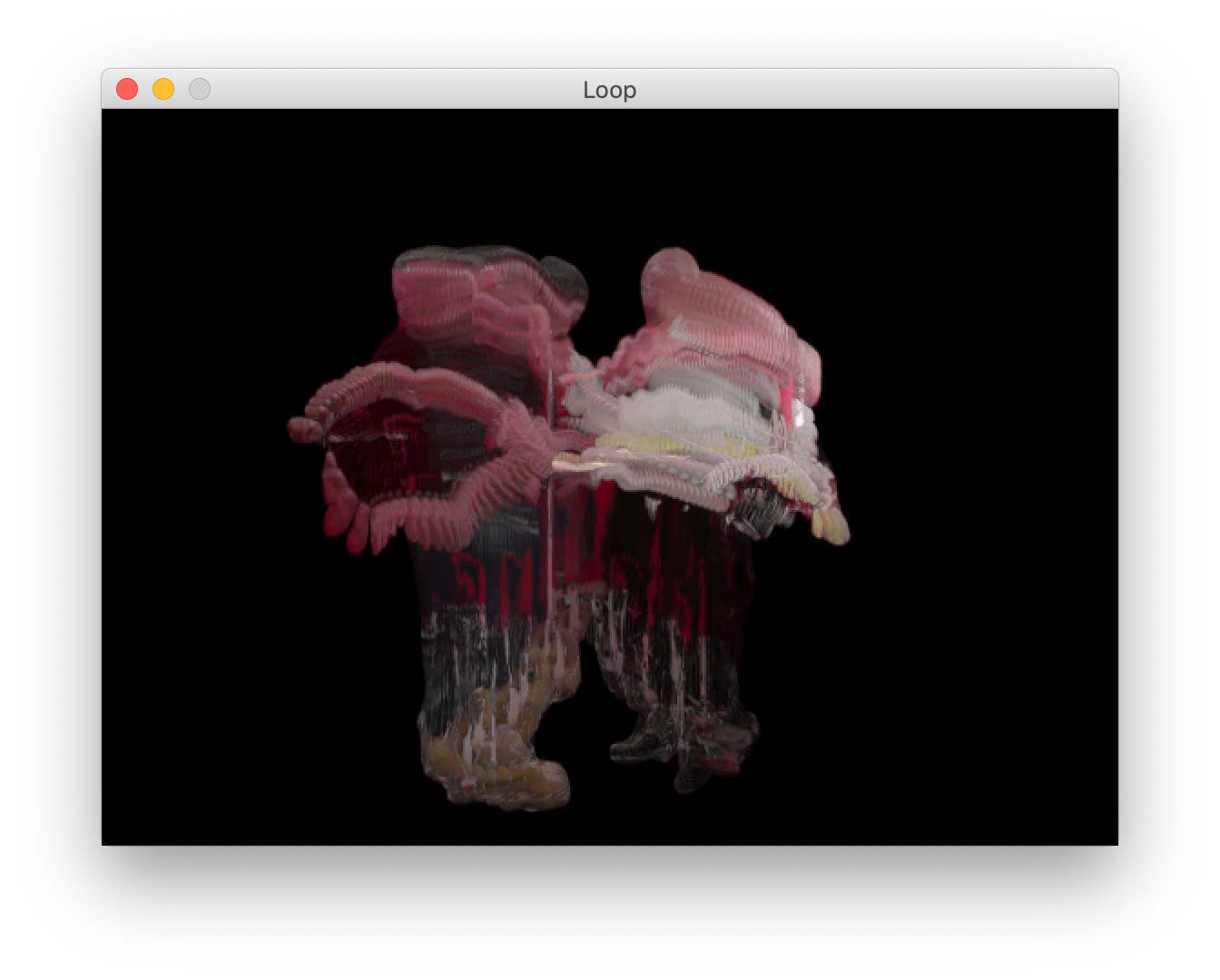

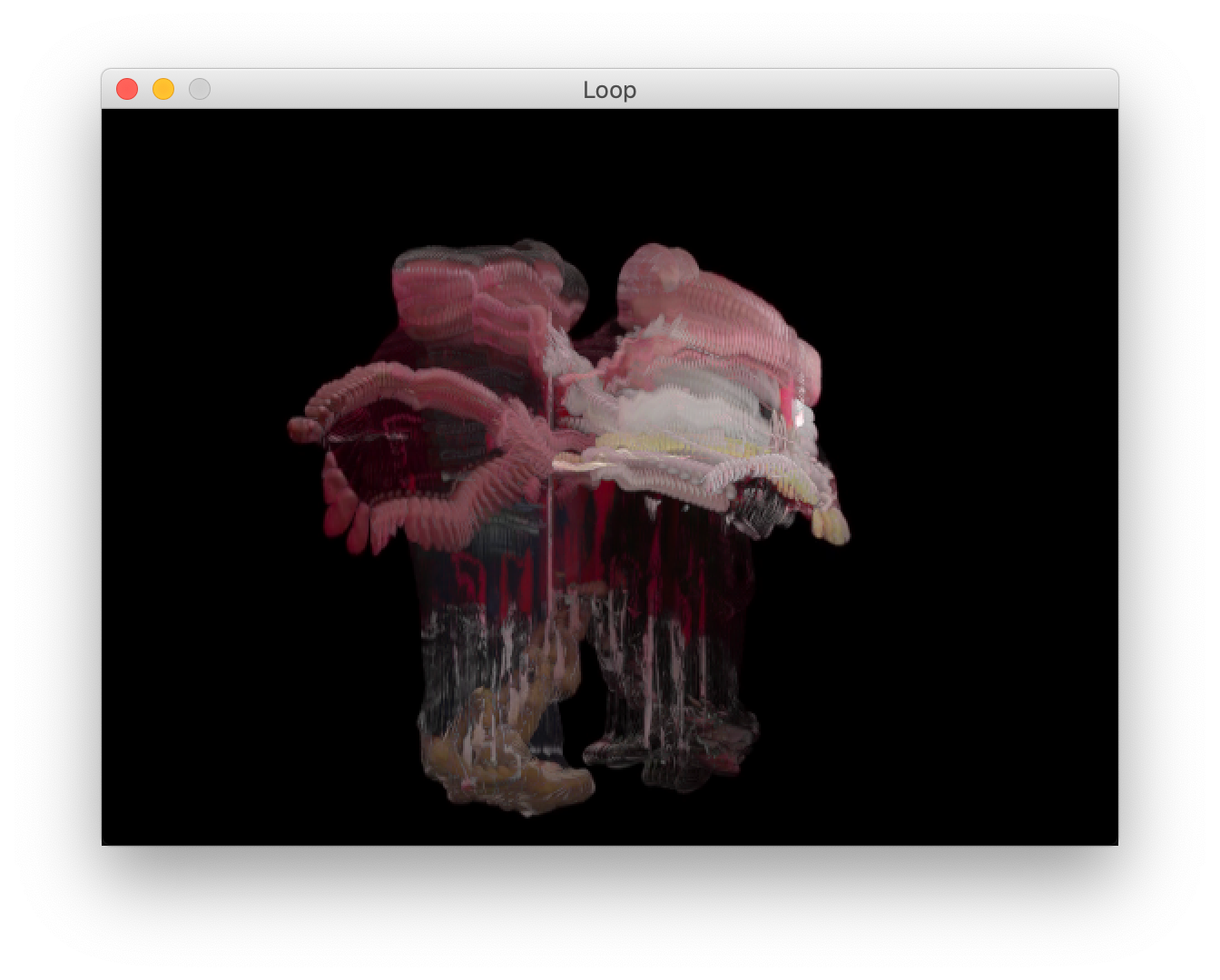

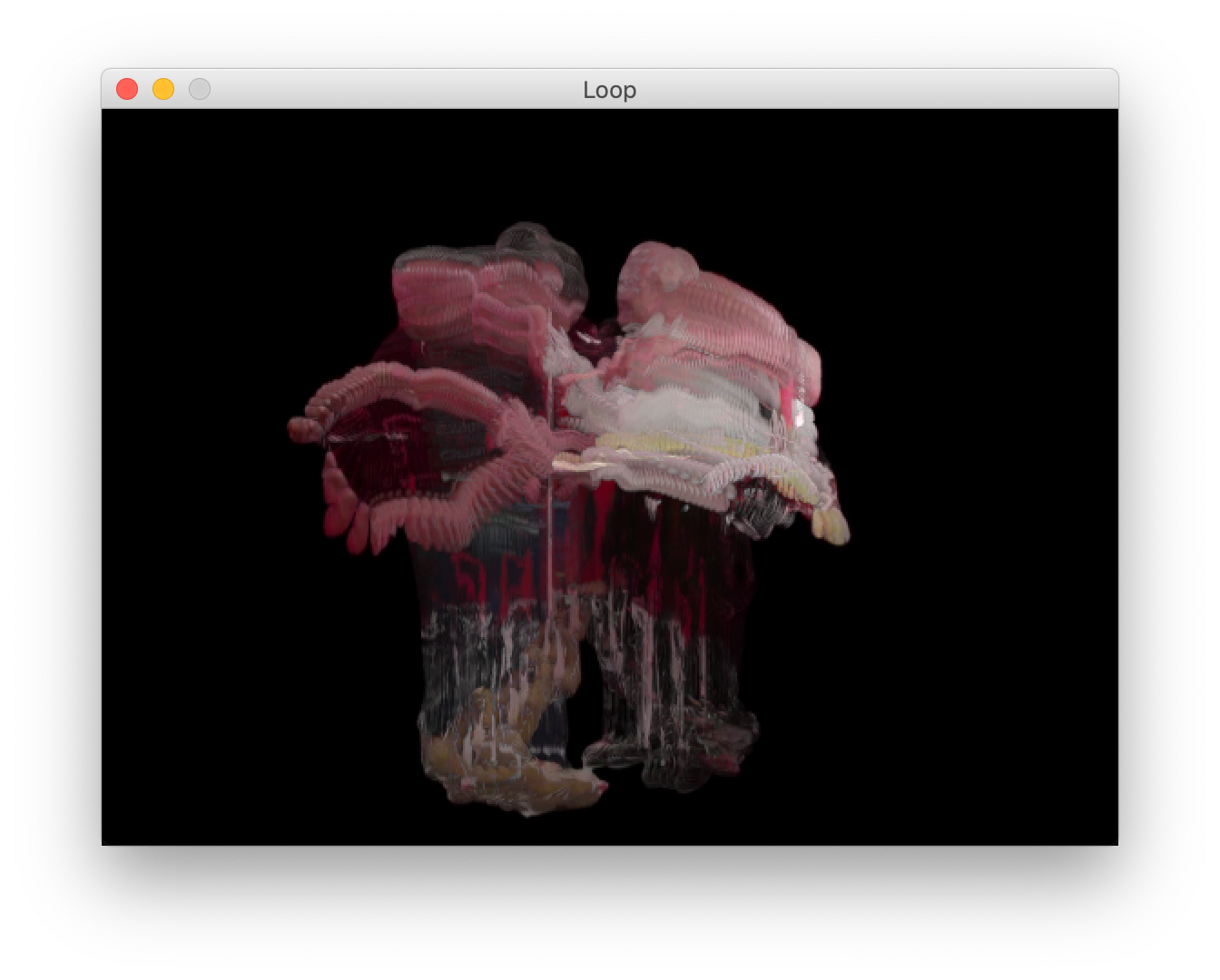

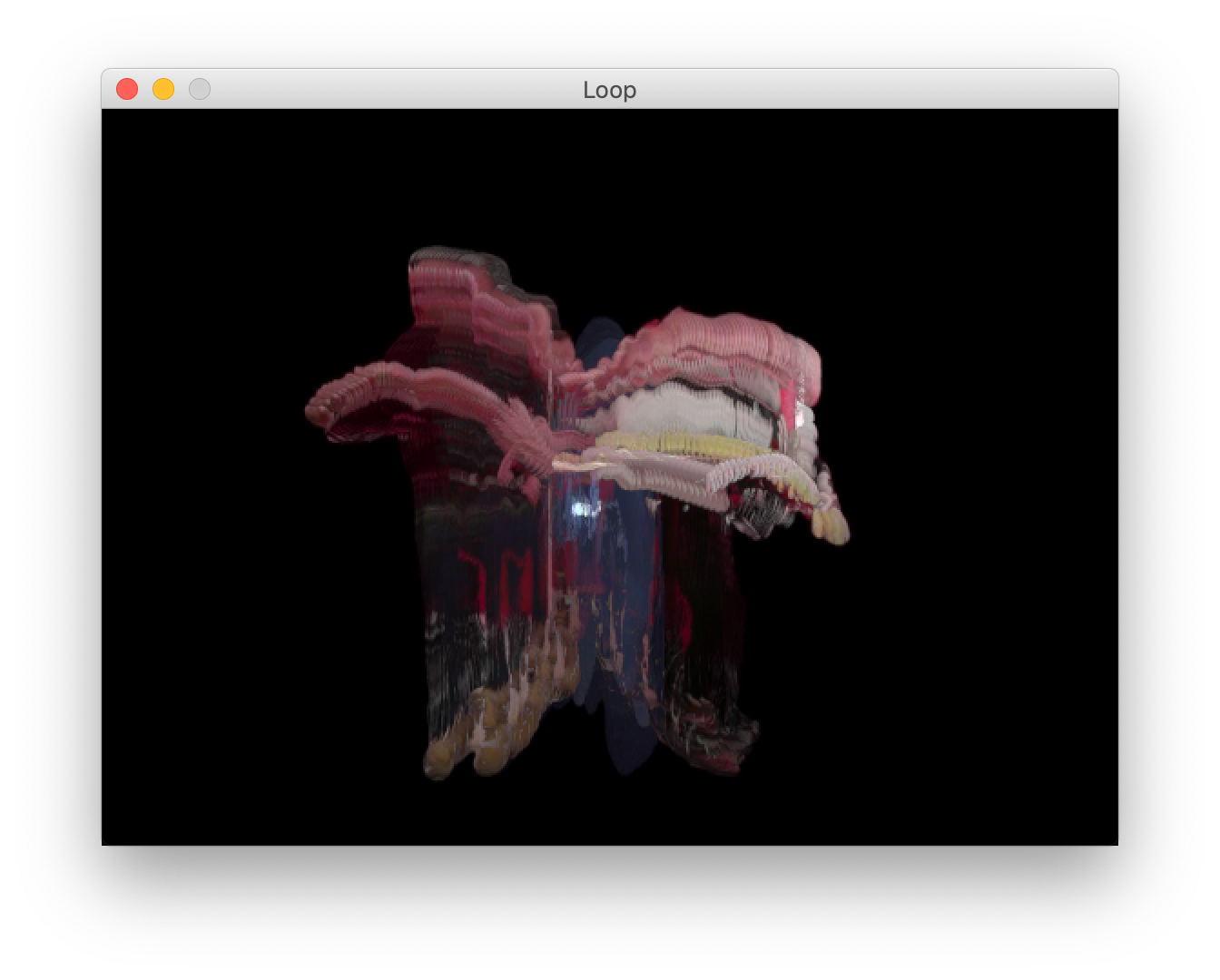

Once I had gathered some tracking data, I isolated the points of movement and began to add lines and shapes to create a more abstract visual. I then discovered a particle effect through a Maxon plugin. It gave me the tools to create the flowing movement I was trying to recreate by leaving a shadow of the previous movement, like a kind of delay.
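The "shadow of the previous movement" can be thought of as a trail in which every earlier position fades a little each frame. This is a minimal sketch, assuming tracked positions arrive one per frame; `update_trail`, `decay` and `cutoff` are hypothetical names, and this is not how the Maxon plugin works internally.

```python
def update_trail(trail, new_point, decay=0.8, cutoff=0.1):
    """Fade existing trail points and add the newest point at full opacity.

    trail: list of (x, y, opacity) tuples, oldest first.
    new_point: (x, y) for this frame, or None if nothing was tracked.
    decay: fraction of opacity each point keeps per frame (hypothetical default).
    cutoff: points fainter than this are dropped from the trail.
    """
    faded = []
    for x, y, opacity in trail:
        opacity *= decay  # older positions fade like an echo
        if opacity >= cutoff:
            faded.append((x, y, opacity))
    if new_point is not None:
        faded.append((new_point[0], new_point[1], 1.0))
    return faded
```

Calling this once per frame and drawing each point with its opacity gives the delayed, ghosting look; a lower `decay` shortens the trail.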

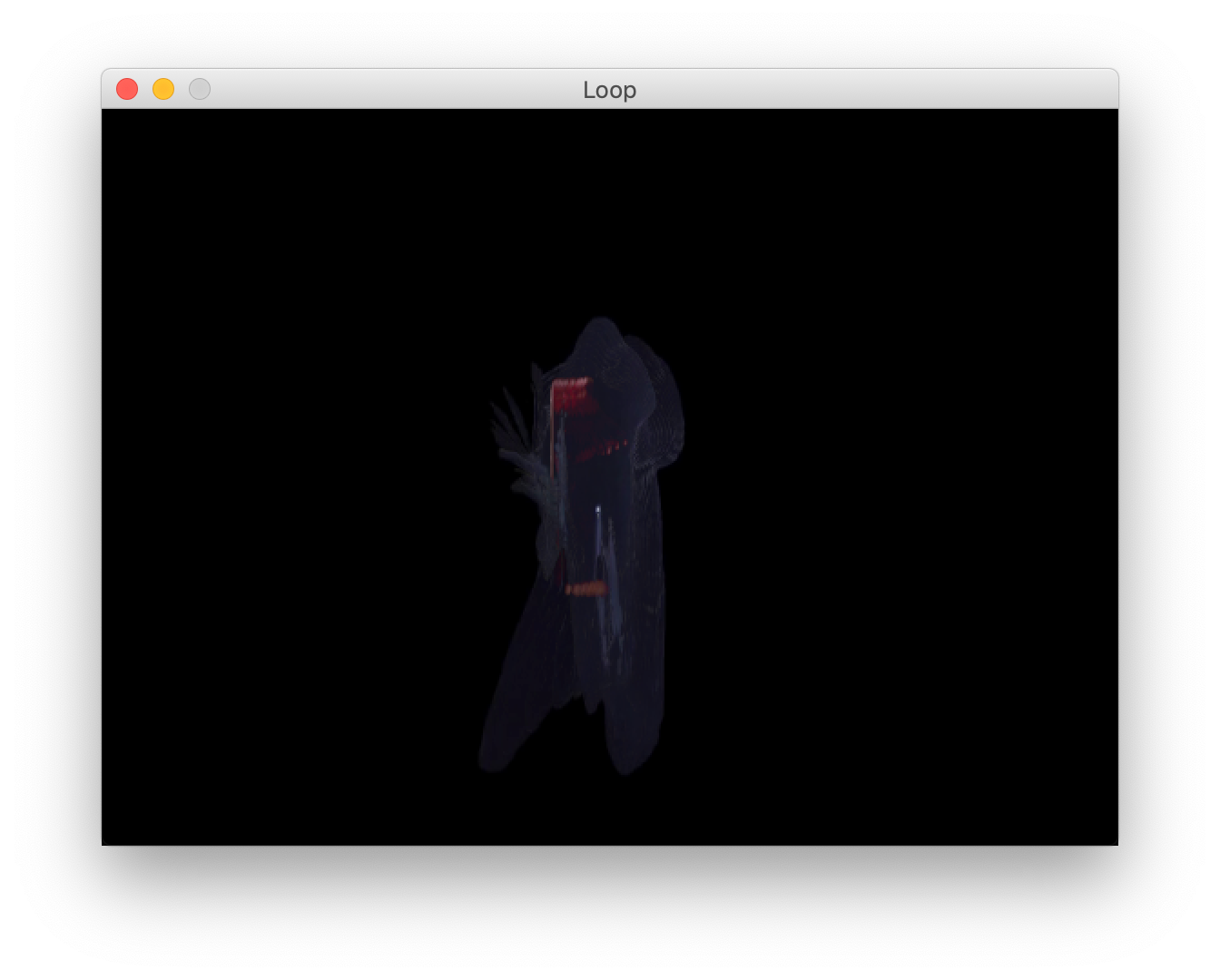

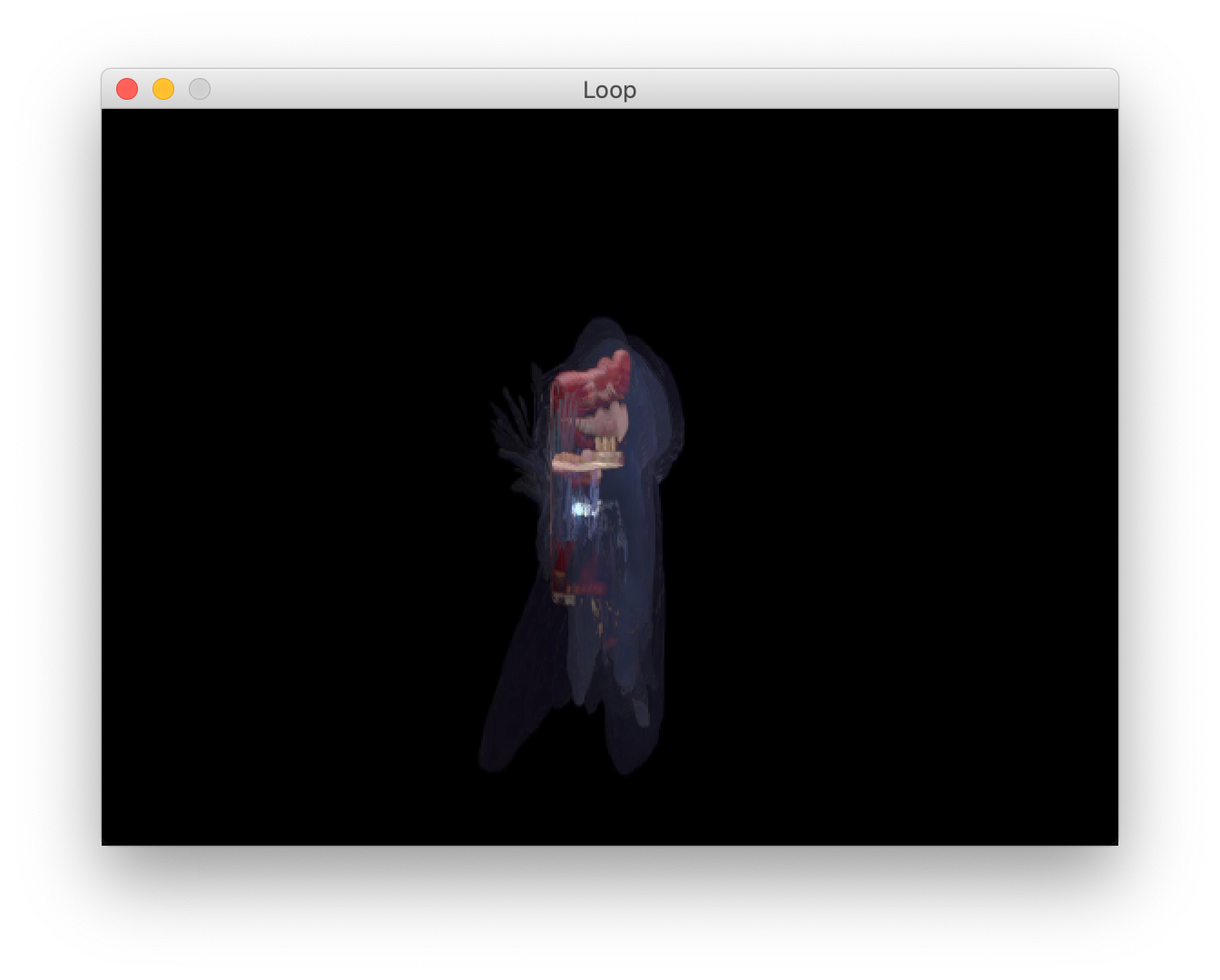

I began thinking about movement in Kubrick's one-point perspective shots and how the character is such a focal point in these moments. Their movements and actions are important. What would happen if we isolated these movements and layered them, or focused on the surroundings of the focal point? We would be left with something very different.

Below is a brief example of this, using the scene of Jack Torrance walking down the hallway to the ballroom in The Shining. I'm unsure what I really achieved by doing so, but I enjoyed the process and the analysis. Breaking something down until it's unrecognisable, and questioning whether it can be built back into something else, is an interesting route to consider.

I achieved this by using the Red Giant Trapcode demo. The particle effects create these seemingly 3D visuals of movement, and attaching particles to the motion tracker produced a nice experiment.

Jack Walking In Hallway: particles experiment in Adobe After Effects

I liked this outcome, but it was a hassle to get to this point and took up a fair bit of my time dealing with logins and contacting Red Giant's support staff. After my tutorial with Gillian, where we discussed some issues I was having with After Effects, I felt I had to get a handle on what I wanted to achieve. I'd been a bit deflated in terms of my workflow and was finding it difficult to get the ball rolling on my experiments. It didn't help that I was running into problems using the video library on the system my Mac was running, as well as issues with the Adobe suite. Everything I attempted felt underwhelming and not really what I had hoped to achieve. I understood that I had to push myself to realise concepts, execute them, and communicate my ideas to an audience.

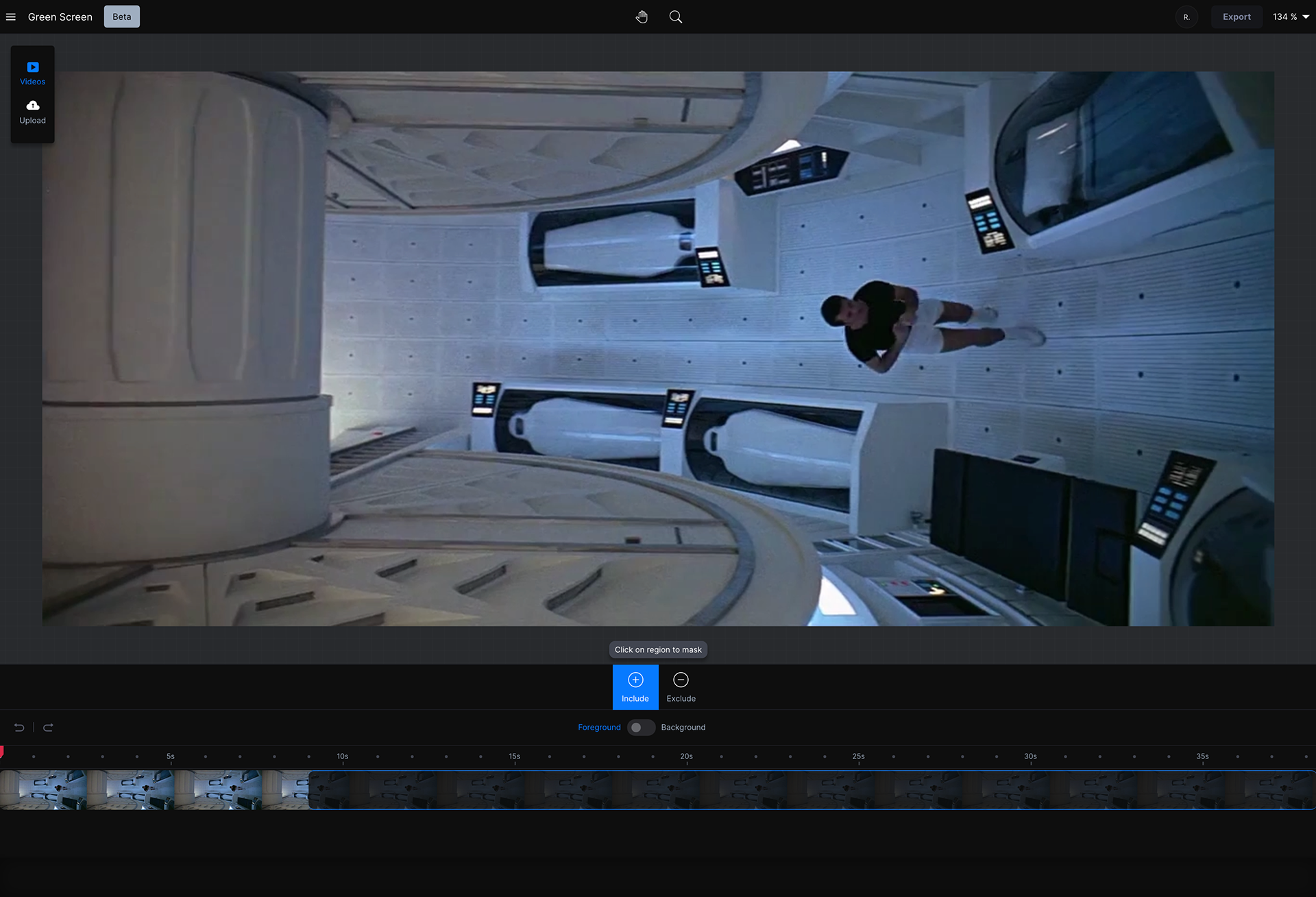

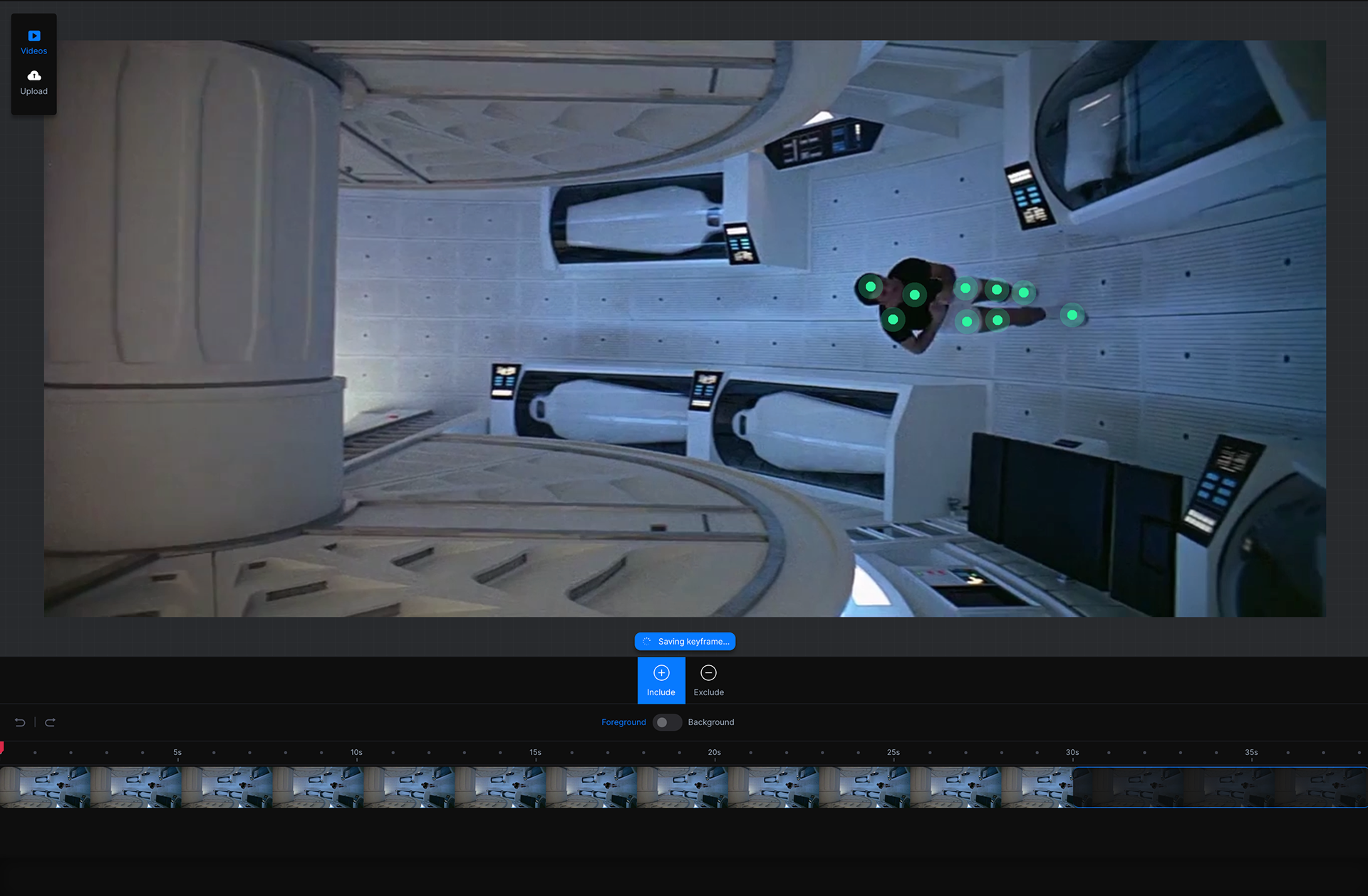

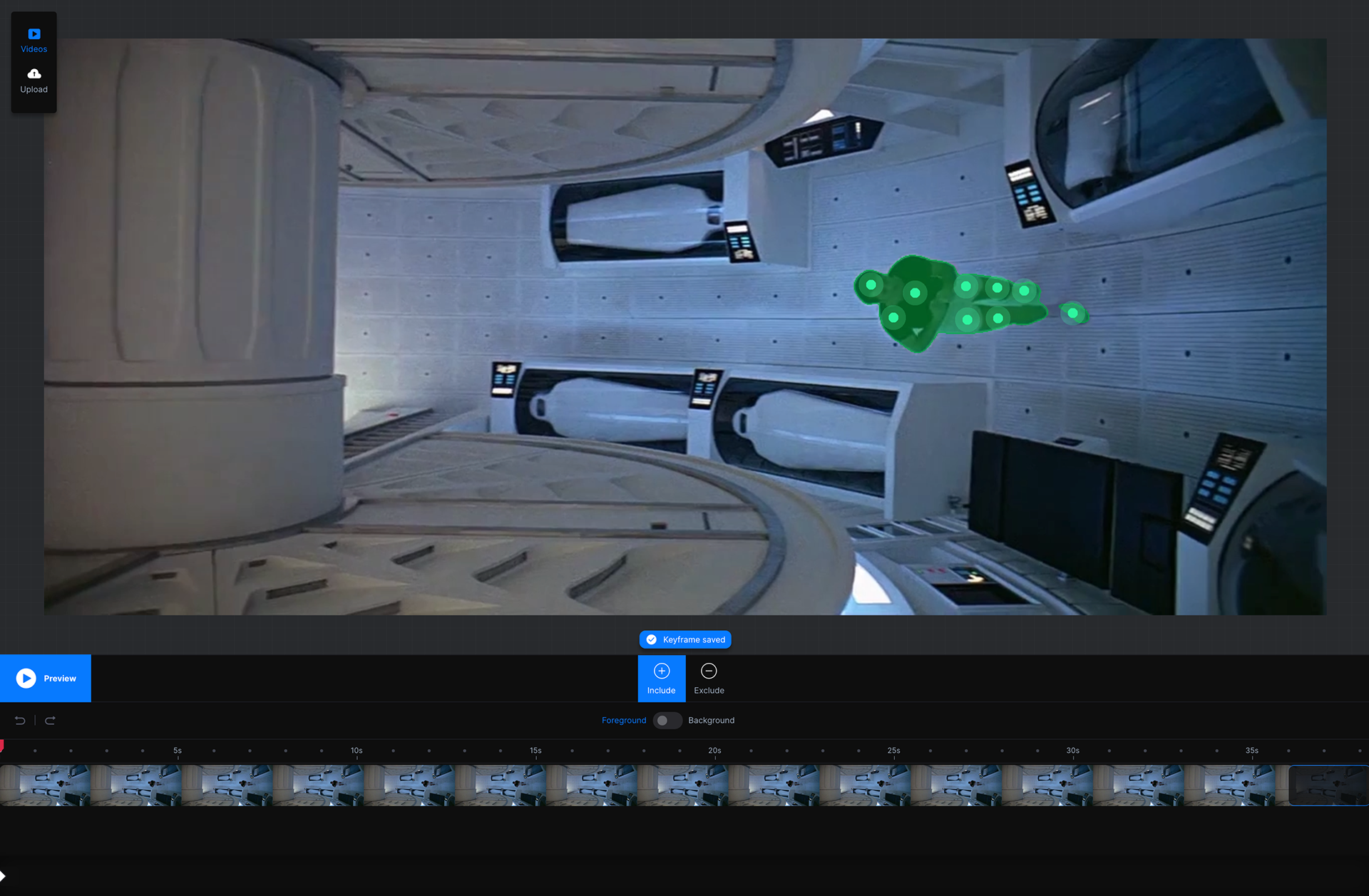

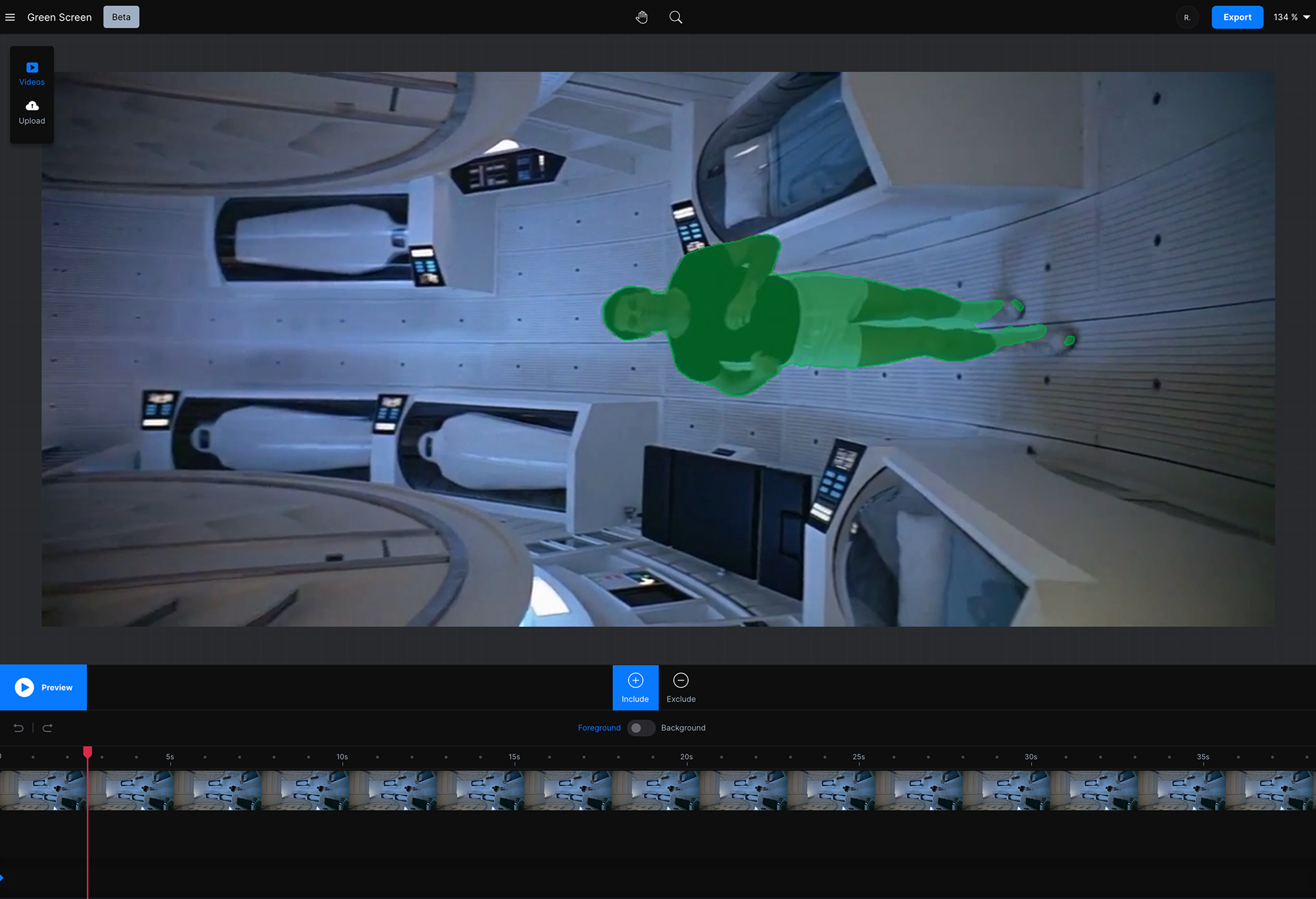

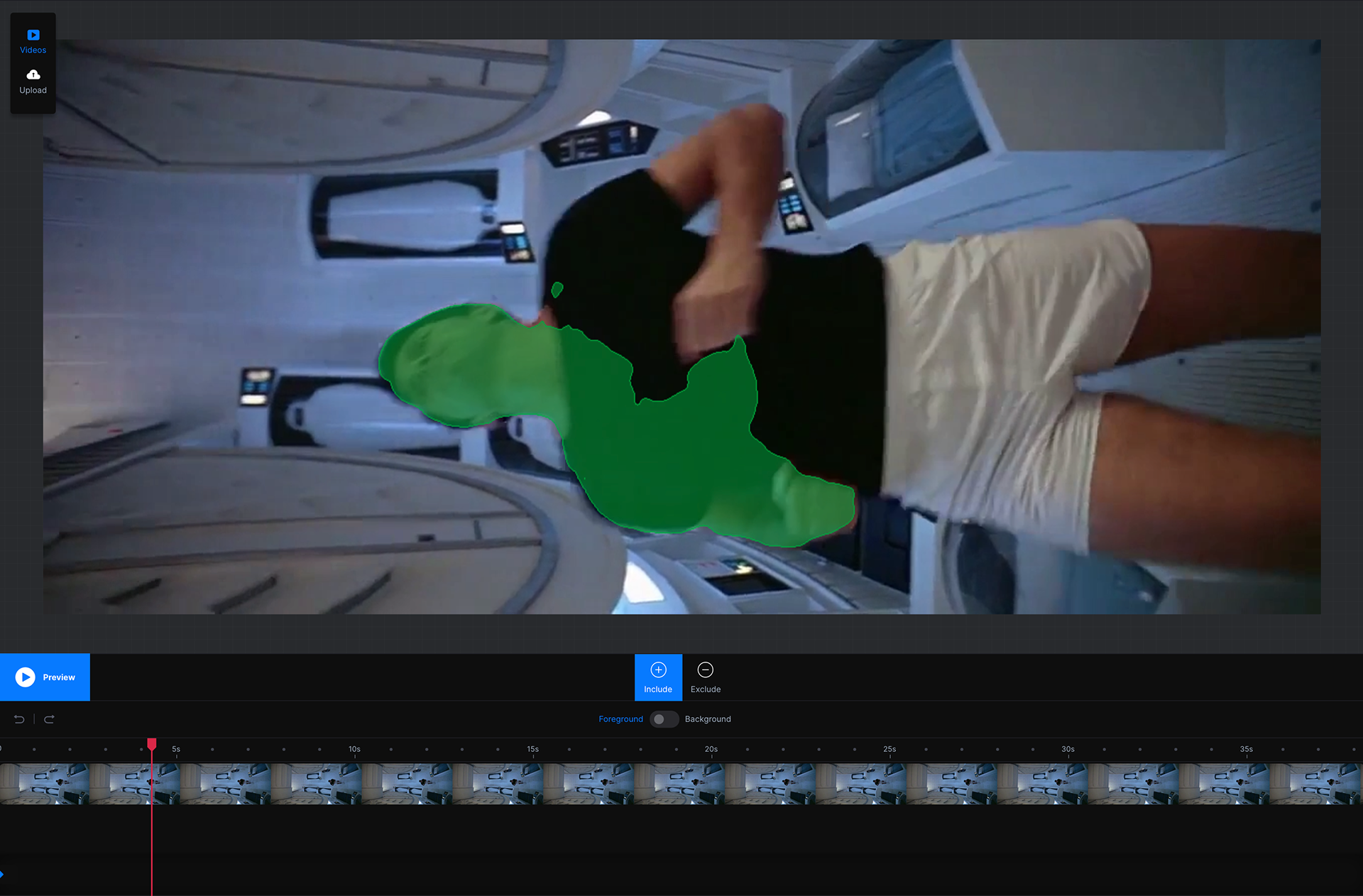

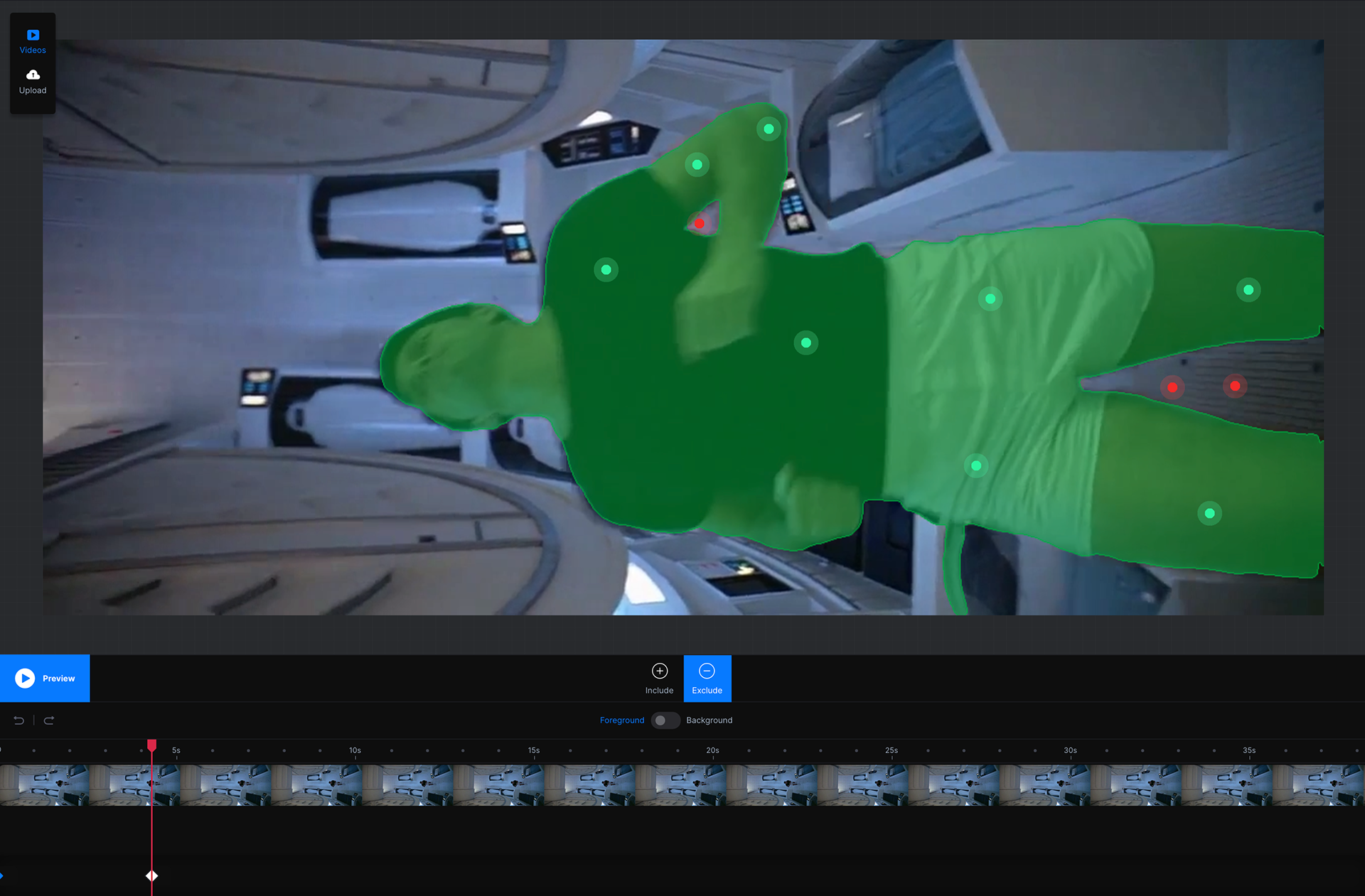

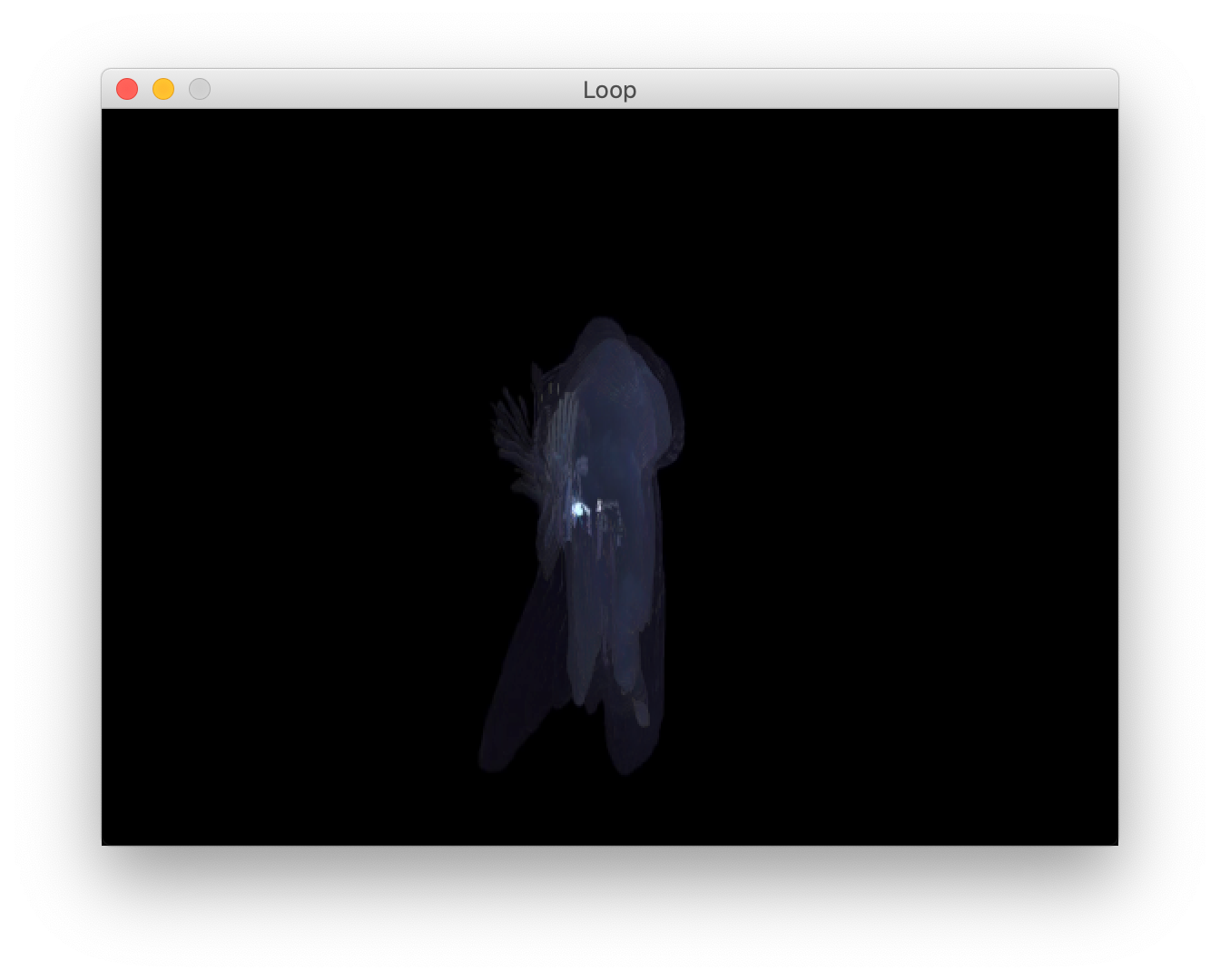

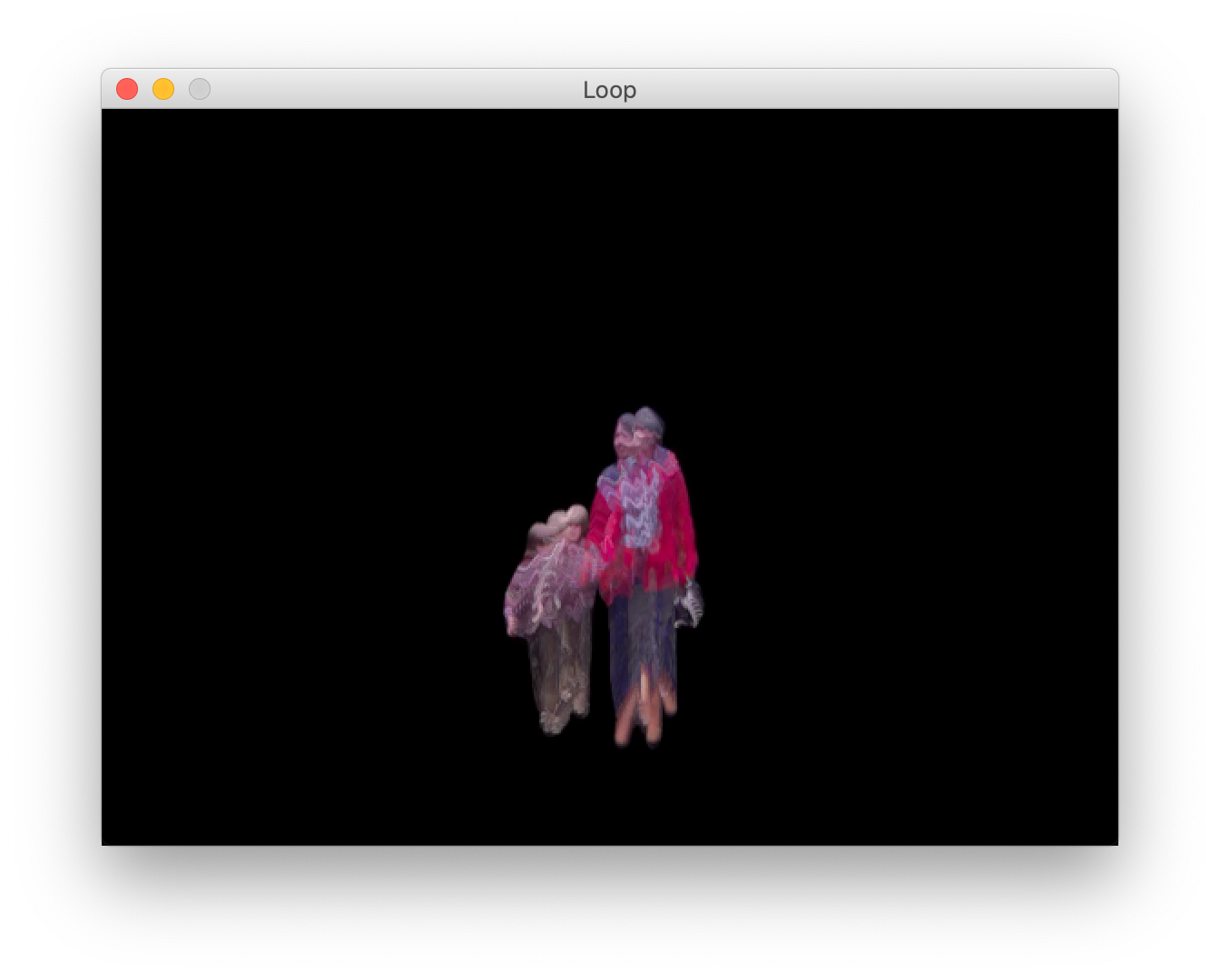

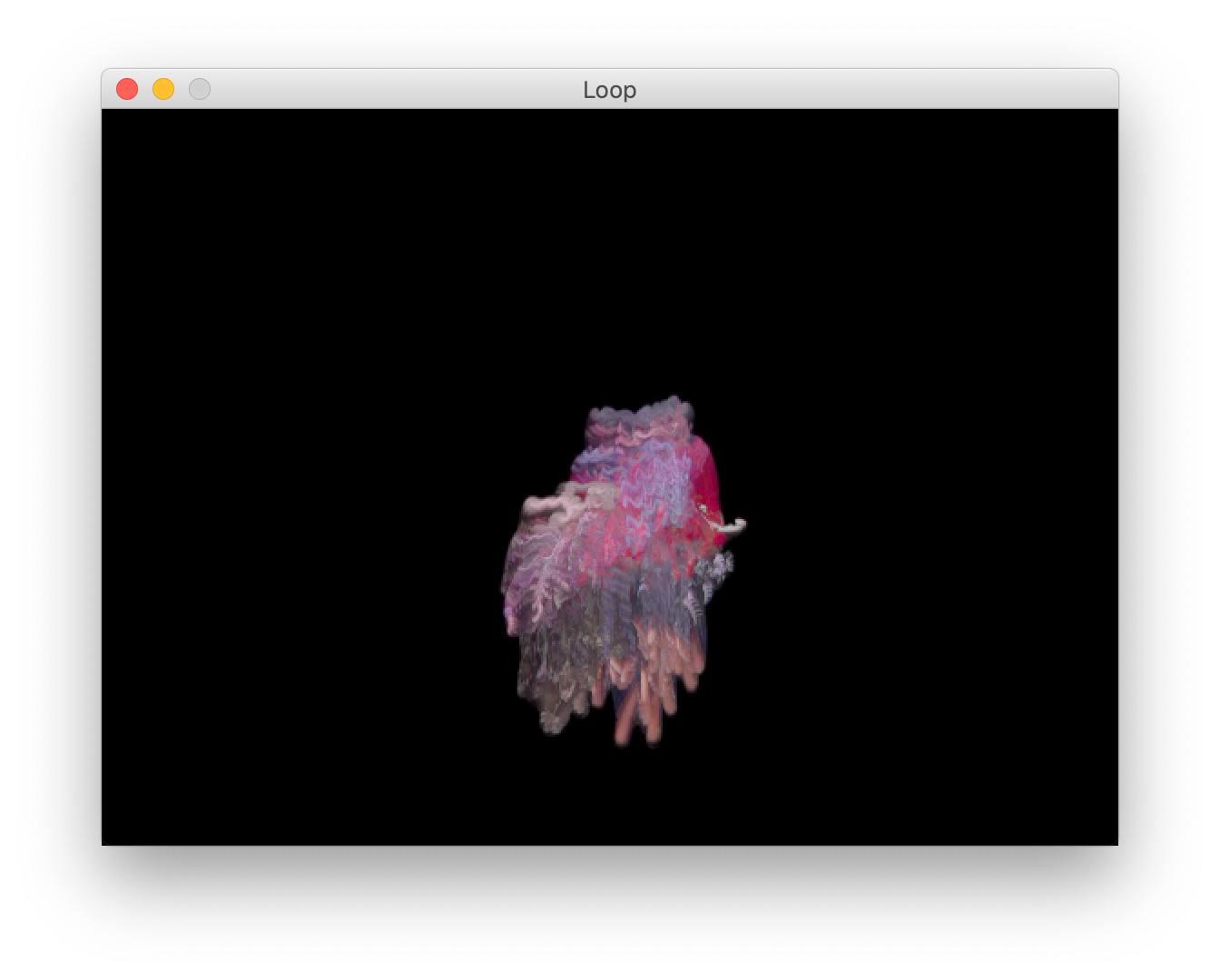

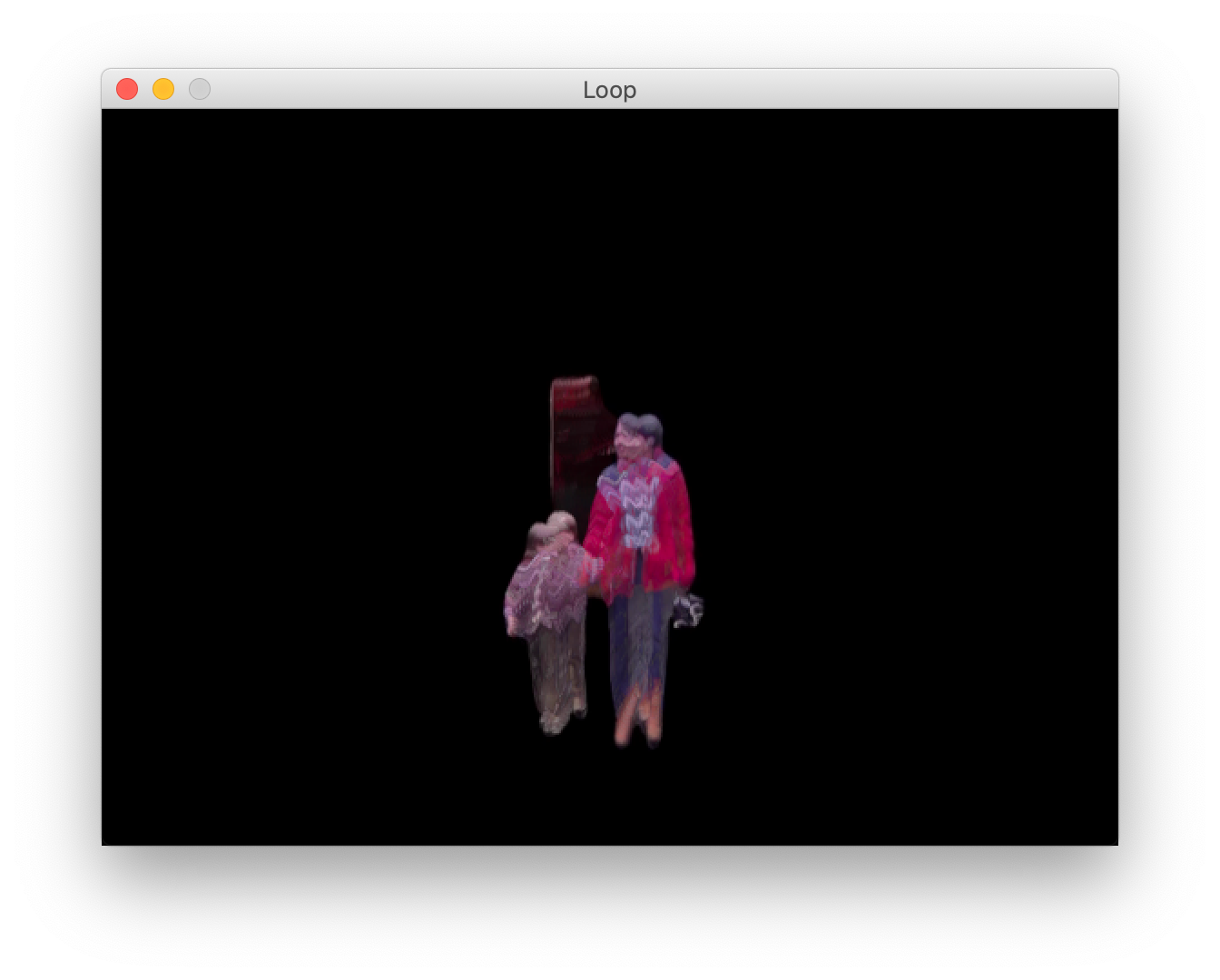

Due to my issues with After Effects, I turned to RunwayML, a very user-friendly machine learning program that allows artists and designers to bring machine learning into their work. Its new green screen video tool lets users easily separate the foreground from the background in videos.

This allowed me to develop my ideas about people inhabiting these spaces in films and how we watch them as voyeurs.